No doubt we need better ways to test for consciousness, just as we need better definitions. From the point of view of science, consciousness is a controversial and quite elusive phenomenon, and trying to build good tests is a crucial part of the quest to understand it. Assessment and definition are always inseparable (otherwise, we wouldn’t know what we are testing).

Effective testing for consciousness in humans is something we take for granted, especially when we put our trust in anesthesiologists right before going into surgery, or when ER physicians use the Glasgow Coma Scale to assess a patient's level of consciousness. Assessing the level of consciousness in human subjects is usually not a problematic or challenging task (except for patients with locked-in syndrome and the like). However, when we consider the vast range of other kinds of organisms, such as other animals, plants and machines, things get much more complicated. Scientifically speaking, the problem is essentially the same for all creatures. There is, however, a huge difference when we speak about humans: for humans we assume consciousness to be a legitimate feature of the living organism.

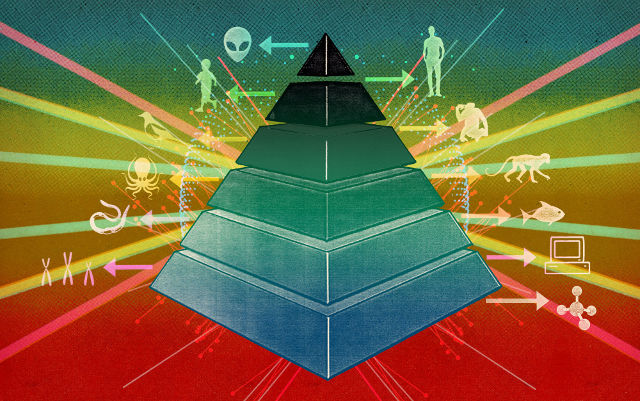

So, what do we do if we want to come up with a universal test for consciousness, one that might be applicable to virtually any possible creature, including biological organisms, artificial machines or cyborgs? What features do we need to look at, and what measurements do we need to take? In other words, what is consciousness made of, so that we can measure it?

As neatly described by Musser in his Aeon essay ("Consciousness Creep. Our machines could become self-aware without our knowing it"), we are making efforts, and hopefully some progress, in building new ways to test for consciousness. ConsScale is an example of this quest for both understanding and measuring consciousness.

In his essay, Musser presents several of the tests that have recently been proposed, explaining the vision and position of their authors, including my own. It’s interesting to see how different approaches to testing imply different assumptions about what consciousness is. Tononi’s approach is based on the Information Integration theory; ConsScale is based on cognitive development; Haikonen stresses the importance of inner talk; and Schwitzgebel raises the question of consciousness in groups (super-organisms). Perhaps we need to look for a new approach that is able to deal with all these aspects within the same framework.

Hi Raúl,

It is so nice to see you active again in this most interesting field.

In my book “Consciousness and Robot Sentience” (Haikonen P, World Scientific 2012) I state that there is only one requirement for true phenomenal consciousness, and inner speech is not that one. The real requirement is the presence of the inner phenomenal appearance, or feel, of our sensory percepts. Instead of perceiving neural firings, we perceive what we believe to be the qualities of the perceived world and our body, including pain and pleasure. Likewise, we perceive our inner speech as virtually heard speech. The presence of inner reportable phenomenal appearance is the only relevant requirement, but it is also the most difficult to prove to be present in beings other than ourselves. The same goes for robots. I believe that this problem cannot be solved by philosophy alone, and therefore I am doing these experiments with my neural XCR-1 robot. The presence of inner speech is, of course, one possible indication of inner phenomenal appearances in systems other than digital computers.